How to build a robot that feels

J. Kevin O'Regan

Talk given at CogSys 2010 at ETH Zurich on 27/1/2010

Overview. Consciousness is often considered to have a "hard" part and a not-so-hard part. With the help of work in artificial intelligence and, more recently, in embodied robotics, there is hope that we shall be able to solve the not-so-hard part and make artificial agents that understand their environment, communicate with their friends and, most importantly, have a notion of "self" and "others". But will such agents feel anything? Building the feel into the agent will be the "hard" part.

I shall explain how action provides a solution. Taking the stance that feel is a way of acting in the world provides a way of accounting for what has been considered the mystery of "qualia", namely why they are very difficult to describe to others and even to oneself, why they can nevertheless be compared and contrasted, and, most importantly, why there is something it's like to experience them: that is, why they have phenomenal "presence".

As an application of this approach to the phenomenal aspect of consciousness, I shall show how it explains why colors are the way they are, that is, why they are experienced as colors rather than, say, sounds or smells, and why, for example, the color red looks red to us, rather than looking green, say, or feeling like the sound of a bell.

-

When Arnold Schwarzenegger, playing the role of a very advanced robot in the film Terminator, ends up being consumed in a bath of burning oil and fire, he goes on steadfastly till the last, fighting to protect his human friends. As a very intelligent robot, able to communicate and reason, he knows that what's happening to him is a BAD THING, but he doesn't FEEL THE PAIN.

This is the classic view of robots today: people believe that robots could be very sophisticated, able to speak, understand, and even have the notion of "self" and use the word "I" appropriately. But as humans we have difficulty accepting the idea that robots should ever be able to FEEL anything. After all, they are mere MACHINES!

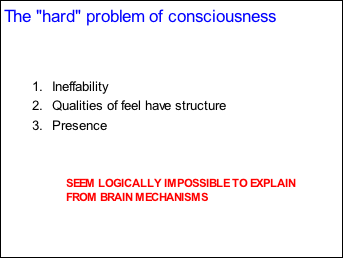

Philosophers also have difficulty with the problem of FEEL, which they often refer to as the problem of QUALIA, that is, the perceived quality of sensory experience, the basic "what it's like" of, say, red, or the touch of a feather, or the prick of a pin. Understanding qualia or feel is what the philosophers David Chalmers and Daniel Dennett call the "hard problem" of consciousness.

-

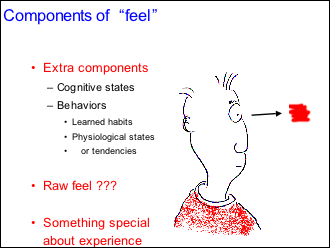

Let's try to look at what is so difficult about understanding feel. The first step must be to define what we really mean when we talk about feel.

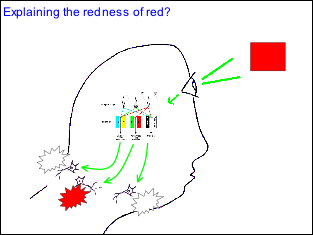

Suppose I look at a red patch of color: I see red. What exactly is this feel of red? What do I experience when I feel the feel of red?

I would say that, first of all, there are cognitive states: mental associations like roses, ketchup, blood and red traffic lights, and knowledge about how red is related to other colors, for example that it's similar to pink and quite different from green. Other cognitive states are the thoughts and linguistic utterances that seeing redness provokes, as well as the plans, decisions, opinions or desires that seeing redness may give rise to.

Now surely all this can be built into a robot. Perhaps not yet today, and not in such a sophisticated fashion as in humans. But since symbolic and cognitive processing are the very subject matter of Artificial Intelligence, having cognitive states like this is, in the future, within the realm of robotics. So presumably here there is no logical problem.

Behavioral reactions are a second aspect of what it is like to have a feel. There are automatic reactions, like pressing on the brake at a red traffic light when I drive, as any good driver does. There may also be physiological tendencies involved in seeing red: perhaps redness changes my general physical state, making me more excited than when I gaze at a cool blue sky.

Both automatic bodily reactions and physiological tendencies, to the extent that the robot has a body and can be wired up so as to react appropriately, should not be too difficult to build into the robot. So here too there is no logical problem, although it may take us a few more decades to get that far.

-

But the trouble is that all these components of feel seem to most people not to constitute the RAW feel of red itself. Most people will say that they are CAUSED by the fact that we feel red. They are extra components, products, or add-ons of something that most people think exists, namely the primal, raw feel of red itself, which is at the core of what happens when I look at a red patch of color.

Does raw feel really exist? Certainly many people would say that we have the impression it exists, since otherwise there would be "nothing it's like" to have sensations. We would be mere machines or "zombies", empty vessels making movements and reacting with our outside environments, but there would be no inside "feel" to anything.

Other people, most notably the philosopher Daniel Dennett, consider that raw feel does not exist, and that it is somehow just a confusion in our way of thinking.

But the important point is that EVEN IF DENNETT IS RIGHT, and raw feel does not really exist, something still needs to be explained. Even Dennett must agree that there is something special about sensory experience that at least MAKES IT SEEM TO MANY PEOPLE that raw feel exists! Let me look at what this something special is.

--

Ineffability is really what springs to mind first as being peculiar about feels: namely the fact that, because they are essentially first-person, it is ultimately impossible to communicate to someone else what feels are like.

I remember as a child asking my mother what a headache was like and never getting a satisfactory answer until the day I actually got one. Many people have asked themselves whether their spouse or companion sees colors the same way as they do!

This ineffability has led many people to conclude that an EXTRA THEORETICAL APPARATUS will be needed to solve the problem of the "what-it-is-like" of experience.

Ineffability is thus the first critical aspect of raw feels that we need to explain.

--

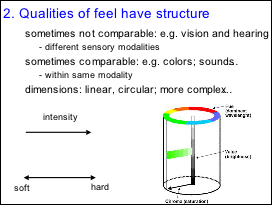

But even if we can't describe feels, there is one thing we know, namely that they are all different from one another.

Sometimes this difference allows for no comparison. Vision and hearing are different. How are they different? Difficult to say... For example, there seems to be no basis for comparing the red of red with the sound of a bell, or the smell of an onion with the touch of a feather. When sensations can't be compared in this way, we say that they belong to different modalities: vision, hearing, touch, smell, taste...

But within sensory modalities, experiences can be compared, or at least structured. Austen Clark, in his brilliant book Sensory Qualities, looks at this in detail. For example, we can make comparisons of comparisons, and observe that red is more different from green than it is from pink.

By compiling such comparisons we can structure sensory qualities and notice that sometimes they can be conveniently organised into dimensions.

Dimensions can sometimes be linear, going from nothing to infinity, as when we go from no brightness to very, very bright, or from complete silence to very, very loud. Sometimes they go from minus infinity to plus infinity, as from very, very cold to very, very hot. Sometimes sensations need circular dimensions to describe them adequately, as when we go from red to orange to yellow to green to blue to violet and back to red.
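The difference between a linear and a circular dimension shows up as soon as we try to measure distance along it. The minimal Python sketch below assumes, purely for illustration, that hues are positions in degrees on a circle; the positions are arbitrary numbers, not measured data, and the function names are hypothetical.

```python
def linear_distance(a, b):
    """Distance along a linear dimension such as loudness: the ends never meet."""
    return abs(a - b)


def circular_distance(a_deg, b_deg, period=360.0):
    """Distance along a circular dimension such as hue, in degrees.

    Going around the circle wraps back to the start, so the distance is the
    shorter of the two arcs between the two positions.
    """
    d = abs(a_deg - b_deg) % period
    return min(d, period - d)


# Hypothetical hue positions on either side of the point where the circle
# closes (e.g. violet shading back into red).
print(linear_distance(350, 10))    # 340
print(circular_distance(350, 10))  # 20.0
```

Treated as a line, the two hues look maximally far apart; treated as a circle, they are neighbours, which is what the red-to-violet-and-back ordering requires.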

Sometimes, as in the case of smell, as many as 30 separate dimensions seem to be necessary to describe the quality of sensations.

Can such facts be accounted for in terms of neurophysiological mechanisms in the brain?

The simplest example is something like sound intensity. I ask you to reflect on this carefully. Suppose we found that perceived sound intensity correlates perfectly with neural activation in a particular brain region, with very strong perceived intensity corresponding to very high neural activation. At first this seems like a satisfactory state of affairs, something a neurophysiologist would consider very close to an explanation of perceived sound intensity. BUT IT SIMPLY IS NOT!!

For WHY should highly active neurons give you the sensation of a loud sound, whereas little activation corresponds to a soft sound? Neural activation is simply a CODE. Just pointing out that there is something in common between the code and the perceived intensity is not an explanation at all. The code could be exactly the opposite and still be a perfectly good code.
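The claim that the opposite code would work just as well can be checked with a toy example. In this hypothetical Python sketch, an intensity between 0 and 1 is encoded as a firing rate that either rises or falls with intensity (the maximum rate of 100 is an arbitrary assumption). Both codes decode perfectly, so the mere fact that one of them is in use cannot explain why a loud sound feels loud.

```python
MAX_RATE = 100.0  # hypothetical maximum firing rate (spikes per second)


def encode_increasing(intensity):
    """More intensity -> more firing."""
    return MAX_RATE * intensity


def encode_decreasing(intensity):
    """More intensity -> less firing: the opposite convention."""
    return MAX_RATE * (1.0 - intensity)


def decode_increasing(rate):
    return rate / MAX_RATE


def decode_decreasing(rate):
    return 1.0 - rate / MAX_RATE


for intensity in (0.0, 0.25, 0.5, 1.0):
    up = decode_increasing(encode_increasing(intensity))
    down = decode_decreasing(encode_decreasing(intensity))
    # Both codes carry the same information about intensity.
    print(intensity, up, down)
```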

--

Let's take the example of color.

Color scientists since Ewald Hering at the end of the 19th century have known that an important aspect of the perceptual structure of colors is the fact that their hues can be arranged along two dimensions: a red-green axis and a blue-yellow axis. Neurophysiologists have indeed localized neural pathways that seem to correspond to these perceptual axes. The trouble is: what is it about the neurons in the red-green channel that gives that red or green feeling, whereas neurons in the blue-yellow channel provide that yellow or blue feeling?

Another issue concerns the perceived proximity of colors and the similarity of the corresponding brain states.

Suppose it turned out that some brain state produces the raw feel of red and, furthermore, that brain states near that state produce feels that are very near to red. This could happen in a lawful way that actually corresponds to people's judgments about the proximity of red to other colors.

The trouble is, what do you mean by saying that a brain state is near another brain state? Brain states are activities of millions of neurons, and there is no single way of saying that this brain state is "more in the direction" of another brain state. Furthermore, even if we do find some way of ordering the brain states so that their similarities correspond to perceptual judgments about similarities between colors, we can always ask: why is it this way of ordering the brain states, rather than that one, which predicts sensory judgments?
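Which brain state counts as "nearest" depends entirely on the distance measure chosen. In the toy Python sketch below, brain states are hypothetical two-neuron activity vectors with made-up values. Euclidean distance and cosine distance pick different nearest neighbours for the same reference state, which is exactly the kind of arbitrariness raised above.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two activity vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a, b):
    """1 - cos(angle): ignores overall activity level, compares only the pattern."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(reference, candidates, distance):
    """Name of the candidate state closest to the reference under a given distance."""
    return min(candidates, key=lambda name: distance(reference, candidates[name]))


reference = (1.0, 0.0)  # hypothetical state for "red"
candidates = {
    "A": (2.0, 0.0),    # same pattern, twice the activity
    "B": (0.5, 0.5),    # different pattern, similar overall activity
}

print(nearest(reference, candidates, euclidean_distance))  # B
print(nearest(reference, candidates, cosine_distance))     # A
```

Both measures are perfectly reasonable, yet they disagree, and nothing in the neural activity itself says which one should line up with perceived color similarity.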

So, to summarize concerning the structure of feels: this is a second critical aspect of feels that we need to explain.

-

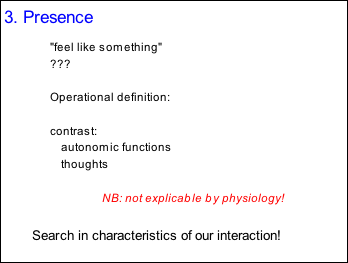

And now for what philosophers consider to be perhaps the most mysterious thing about feels. They "feel like something", rather than feeling like nothing. We all believe that there is "nothing it's like" for a mere machine to capture a video image of red, whereas we really have the impression of seeing redness. Still, though it does somehow ring true to say there is this kind of "presence" to sensory stimulation, the notion is elusive. It would be nice to have an operational definition. Perhaps a way to proceed is by contrast.

--

Consider the fact that your brain is continually monitoring the level of oxygen and carbon dioxide in your blood. It is keeping your heartbeat steady and controlling other bodily functions, like those of your liver and kidneys. All these activities involve biological sensors that register the levels of various chemicals in your body. These sensors signal their measurements via neural circuits, and the signals are processed by the brain. And yet this neural processing has a very different status from the pain of the needle prick or the redness of the light: essentially, whereas you feel the pain and the redness, you do not feel any of the goings-on that determine internal functions like the oxygen level in your blood. The needle prick and the redness of the light are perceptually present to you, whereas states measured by other sensors in your body also cause brain activity but generate no such sensory presence.

You may not have reflected on this difference before, but once you do, you realize that there is a profound mystery here. Why should brain processes involved in processing input from certain sensors (namely the eyes, the ears, etc.) give rise to a felt sensation, whereas other brain processes, deriving from other senses (namely those measuring blood oxygen levels, etc.), do not?

But what about thinking or imagining?

Clearly, as with sensory inputs, you are aware of your thoughts, in the sense that you know what you are thinking about or imagining, and you can, to a large degree, control your thoughts. But being aware of something in this sense of "aware" does not imply that that thing has a feel. Indeed, I suggest that, as far as what they feel like is concerned, thoughts are more like blood oxygen levels than like sensory inputs: thoughts are not associated with any kind of sensory presence. Your thoughts do not present themselves to you as having a particular sensory quality. A thought is a thought, and does not come in different sensory shades in the way that a color does (e.g. red and pink and blue), nor does it come in different intensities like a light or a smell or a touch or a sound might.

To conclude: perhaps a first step toward finding an operational definition of what we mean by "raw feels feel like something" is to note that this statement is made in contrast with brain processes that govern bodily functions, and in contrast with thoughts or imaginings: neither of these imposes itself on us like sensory feels, which are "real" or "present".

This is mystery number three concerning raw feel.

--

To summarize, then: we have three characteristics of raw feel (ineffability, structure, and presence) which are mysterious and seem impossible to explain in terms of physico-chemical mechanisms.

This is the hard problem of consciousness.

--

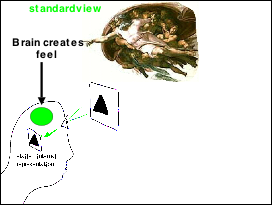

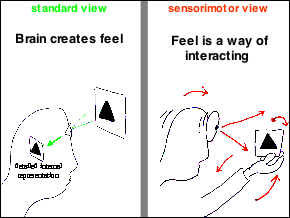

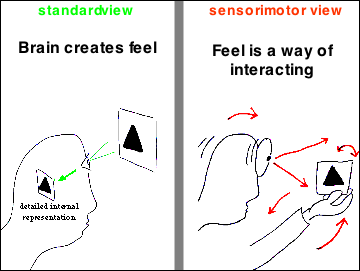

Under the view that there is something in the brain which generates "feel", we are always led to an infinite regress of questions, and ultimately we are reduced to invoking some kind of dualistic magic in order to account for the "what it's like" of feel.

--

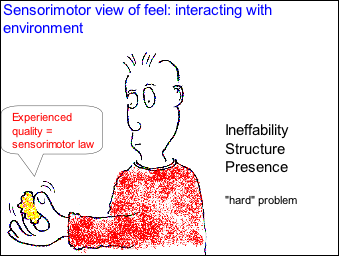

But there is a different view of what feel is, which eliminates the infinite regress. This "sensorimotor" view takes the stance that it is an error to think of feels as the kind of thing that is generated by some physical mechanism, and a fortiori, then, it is an error to look in the brain for something that might be generating feel.

Instead the sensorimotor view suggests that we should think of feel in a new way, namely as a way of interacting with the world.

--

This may not make very much sense at first, so let's take a concrete example, namely the example of softness.

Where is the softness of a sponge generated? If you think about it, you realize that this question is ill-posed. The softness of the sponge is surely not the kind of thing that is generated anywhere! Rather, the softness of the sponge is a quality of the way we interact with sponges. When you press on the sponge, it yields under your pressure. What we mean by softness is that fact.

Note that the quality of softness is not about what we are doing right now with the sponge. It's a fact about the potentialities that our interaction with the sponge presents to us. It's something about the various things we could do if we wanted to.
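Thinking of softness as a law rather than as a state can be illustrated in a few lines. The Python sketch below assumes, for simplicity, a linear spring-like law (displacement proportional to force), which real sponges only approximate; the pressing data and function names are made up. The fitted compliance summarizes the interaction, and it also predicts what would happen for a push we have not actually made, which is the sense in which the quality concerns potentialities.

```python
def estimate_compliance(samples):
    """Least-squares slope through the origin for displacement = compliance * force.

    samples: list of (force, displacement) pairs obtained by pressing on an object.
    """
    num = sum(force * disp for force, disp in samples)
    den = sum(force * force for force, _ in samples)
    if den == 0:
        raise ValueError("need at least one non-zero force")
    return num / den


def predict_displacement(compliance, force):
    """What the law says would happen if we pressed with this force."""
    return compliance * force


# Made-up pressing experiments (force in newtons, displacement in cm).
sponge = [(1.0, 0.5), (2.0, 1.0), (4.0, 2.0)]
stone = [(1.0, 0.001), (2.0, 0.002)]

c_sponge = estimate_compliance(sponge)
c_stone = estimate_compliance(stone)
print(c_sponge, c_stone)                    # the sponge yields far more per unit force
print(predict_displacement(c_sponge, 3.0))  # a push we never actually made
```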

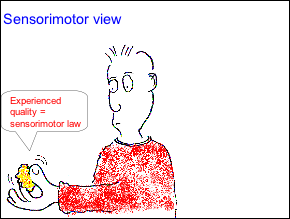

So, to summarize the quality of feels: the sensorimotor view takes the stance that the quality of a feel is constituted by the law of sensorimotor interaction that is being obeyed as we interact with the environment.

--

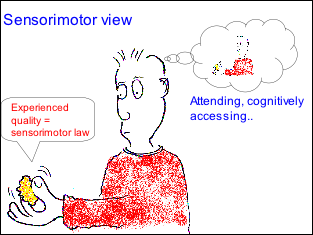

But note that something more is needed. It is not sufficient just to be engaged in a sensorimotor interaction with the world for one to be experiencing a feel. We additionally need to be attending to, that is, cognitively accessing, the fact that we are engaged in this way. I'll come back to what this involves at the end of the talk. For the moment I want to concentrate on the quality of the feel, and leave aside the question of what makes the feel "experienced" by the person.

Let's look at how taking the sensorimotor view explains the three mysteries of feel that I defined earlier: ineffability, structure, and presence.

--

Ineffability:

Obviously, when you squish a sponge there are all sorts of muscles you use and all sorts of things that happen as the sponge squishes under your pressure. It is inconceivable that you could have cognitive access to all these details. It's a bit like when you execute a practised skiing manoeuvre, or when you whistle: you don't really know what you do with your various muscles, you just do the right thing.

The precise laws of the sensorimotor interaction are thus ineffable: they are not available to you, nor can you describe them to other people.

Applied to feels in general, we can understand that the ineffability of feels is a natural consequence of thinking about feels in terms of ways of interacting with the environment. Feels are qualities of actually occurring sensorimotor interactions in which we are currently engaged. We do not have cognitive access to each and every aspect of these interactions.

--

Qualities have structure.

Now let's see how the sponge analogy deals with the second mystery of feel, namely the fact that feels are sometimes comparable and sometimes not, and that when they are comparable, they can sometimes be compared along different kinds of dimensions.

Let's take sponge squishing and whistling as examples.

The first thing to notice is that there is little objectively in common between the modes of interaction constituted by sponge squishing and by whistling.

On the other hand, there is clear structure WITHIN the gamut of variations of sponge squishing: some things are easy to squish, and other things are hard to squish. There is a continuous dimension of softness.

Furthermore, what we mean by softness is the opposite of what we mean by hardness. So one can establish a continuous linear dimension going from very soft to very hard.

So here we have examples that are very reminiscent of what we noticed about raw feels: sometimes comparisons are nonsensical, as between sponge squishing and whistling, and sometimes they are possible, with dimensions along which feels can be compared and contrasted.

Whereas we could not explain these differences through physiology, if we reason in terms of sensorimotor laws, these properties of feel fall out naturally.

--

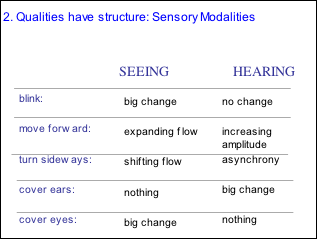

Let's look at some applications of these ideas to real raw feels.

If I'm right about the qualities of feels, then we can explain why they are the way they are, not in terms of the different brain mechanisms that are excited, but in terms of the different laws that govern our interaction with the environment when we have the different feels.

So, for example, where does the difference between hearing and seeing lie? It does not lie in the fact that vision excites the visual cortex and hearing the auditory cortex.

It lies in the fact that when you are seeing and you blink, there is a big change in sensory input, whereas nothing happens when you are hearing and you blink.

It lies in the fact that when you are seeing and you move forward, there is an expanding flow field on your retinas, whereas the change obeys quite different laws in the auditory nerve.
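One way to make "a law linking action to input change" concrete is to measure how much a channel's input changes on time steps when you blink, compared with steps when you don't. The Python sketch below is only a toy illustration of that idea: the recordings are made up, the helper name is hypothetical, and it is not a model of real retinal or auditory-nerve signals.

```python
from statistics import mean


def blink_contingency(log):
    """Mean input change on blink steps minus mean input change on other steps.

    log: list of (blinked, input_change) pairs for one sensory channel.
    A large positive value means the channel's input depends strongly on blinking.
    """
    on_blink = [change for blinked, change in log if blinked]
    off_blink = [change for blinked, change in log if not blinked]
    if not on_blink or not off_blink:
        raise ValueError("need both blink and non-blink steps")
    return mean(on_blink) - mean(off_blink)


# Made-up recordings: the "visual" channel changes a lot whenever we blink;
# the "auditory" channel changes no more on blinks than at other times.
visual_log = [(True, 0.9), (False, 0.05), (True, 0.8), (False, 0.1)]
auditory_log = [(True, 0.2), (False, 0.2), (True, 0.1), (False, 0.1)]

print(blink_contingency(visual_log))    # clearly positive
print(blink_contingency(auditory_log))  # about zero
```

A fuller sketch along these lines would collect many such action-to-input dependencies (blinking, moving forward, turning the head) and characterize a modality by the whole pattern, not by any single number.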

--

Now, if this is really the explanation for differences in the feel associated with different sensory modalities, then it makes a prediction: it predicts that you should be able to see, for example, through the auditory or the tactile modality, provided things are arranged such that the appropriate sensorimotor dependencies are created.

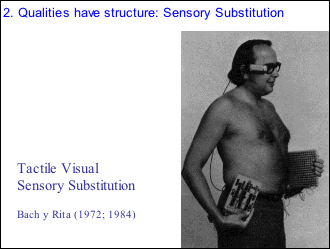

This is the idea of sensory substitution. Already in the 1970s, Paul Bach-y-Rita hooked up a video camera, worn by a blind person on their spectacles, through some electronics to an array of 20 by 20 vibrators that the blind person wore on their stomach or back. He found that, immediately on using the device, observers were able to navigate around the room and had the impression of an outside world, rather than feelings of vibration on the skin. With a bit more practice they were able to identify simple objects in the room. There are reports of blind people referring to the experience as "seeing".
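At the heart of such a camera-to-skin device is a reduction of each video frame to the resolution of the vibrator array. The Python sketch below block-averages a grayscale image onto a 20 by 20 grid and switches on the vibrators whose cell is bright enough. The averaging scheme, the 0.5 threshold and the function names are assumptions for illustration, not a description of Bach-y-Rita's actual electronics.

```python
def to_tactor_grid(image, rows=20, cols=20):
    """Block-average a grayscale image (list of rows, values 0..1) onto a rows x cols grid."""
    height, width = len(image), len(image[0])
    if height < rows or width < cols:
        raise ValueError("image is smaller than the vibrator array")
    grid = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        grid_row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            grid_row.append(sum(block) / len(block))
        grid.append(grid_row)
    return grid


def active_vibrators(grid, threshold=0.5):
    """True where a vibrator should be on (hypothetical on/off rule)."""
    return [[value >= threshold for value in row] for row in grid]


# Made-up 40 x 40 frame: a bright object filling the left half of the view.
frame = [[1.0 if x < 20 else 0.0 for x in range(40)] for _ in range(40)]
pattern = active_vibrators(to_tactor_grid(frame))
print(sum(row.count(True) for row in pattern))  # 200: the left 10 columns are on
```

What matters for the sensorimotor account is not this mapping by itself but the fact that the vibration pattern changes lawfully as the wearer moves the camera, which is what lets the experience become "visual".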

With modern electronics, sensory substitution is becoming easier to arrange, and a variety of devices are being experimented with.

--

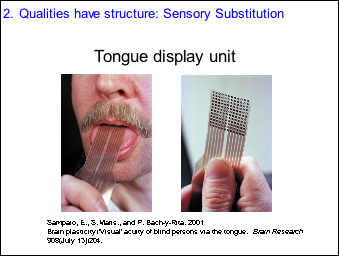

Bach-y-Rita and his collaborators have developed a tongue stimulation device which, though it has low resolution, has proven very useful in substituting for vestibular information.

--

There is work being done on visual-to-auditory substitution, where information from a webcam is translated into a kind of "soundscape" that can be used to navigate and identify objects. A link to a movie showing how a subject learns to use such a device is: http://www.nstu.net/malika-auvray/demos/substitutionsensorielleST3.mpg

--

There is even an application, written in collaboration with Peter Meijer, who invented this particular vision-to-sound system, that works on some Nokia phones.

--

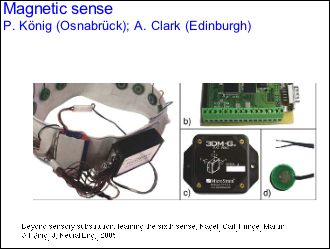

Peter König and his group at Osnabrück have been experimenting with a belt that provides tactile vibrations corresponding to the direction of north. He says that, when worn for several weeks, the device comes to be used unconsciously in people's navigation behavior, and becomes a kind of sixth sense!
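The belt's basic mapping, from the wearer's compass heading to which vibrator should buzz, can be sketched in a few lines. The number of vibrators (8 here), their layout (index 0 at the front, increasing clockwise seen from above) and the function name are assumptions for illustration, not the specification of the Osnabrück device.

```python
def north_motor(heading_deg, n_motors=8):
    """Index of the belt vibrator that currently points north.

    heading_deg: compass direction the wearer is facing (0 = north, 90 = east).
    Vibrator 0 sits at the front; indices increase clockwise seen from above.
    """
    # Bearing of north relative to the direction the wearer faces, clockwise.
    north_relative = (-heading_deg) % 360.0
    spacing = 360.0 / n_motors
    return int(round(north_relative / spacing)) % n_motors


print(north_motor(0))    # 0: facing north, the front vibrator buzzes
print(north_motor(90))   # 6: facing east, north is on the wearer's left
print(north_motor(180))  # 4: facing south, north is behind
```

Every turn of the body shifts the buzzing point around the waist in a lawful way; on the sensorimotor view, it is mastery of this dependency, rather than the vibration itself, that could come to feel like a sense of direction.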

To conclude this section on the structure of the qualities of feel: the idea that qualities are constituted by the laws of sensorimotor dependency that characterise the associated interactions with the world makes interesting predictions, and these have been verified in the case of sensory substitution.

--

I now come to another application of the idea, namely to the question of color.

Color is the philosopher's prototype of a sensory quality. In order to test whether the sensorimotor approach has merit, the best way to proceed seemed to us to be to see if we could apply it to color.

At first it seems counterintuitive to imagine that color sensation has something to do with sensorimotor dependencies: after all, the redness of red is apparent even when one stares at a red surface without moving at all. But given the benefit, as regards bridging the explanatory gap, of applying the theory to color, I tried to find a "sensorimotor" way of conceiving of color.

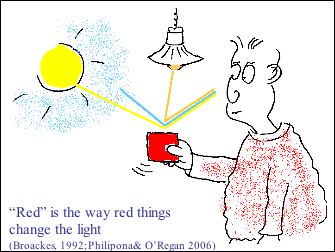

With my doctoral student David Philipona, we realized that this could be done by considering not colored lights but colored surfaces. Color scientists know that when you take a red surface, say, and you move it around under different lights, the light coming into your eyes can change dramatically. For example, in an environment composed mainly of blue light, the reflected light coming off a red surface can only be blue. There is no red light coming off the surface, and yet you see it as red.

The explanation for this surprising fact is well known to color scientists, but not so well known to lay people, who often incorrectly believe that color has mainly to do with the wavelength of light coming into the eyes. In fact, what determines whether a surface appears red is the fact that it absorbs a lot of short-wavelength light and reflects a lot of long-wavelength light. But the actual amount of short- and long-wavelength light coming into the eye at any moment will be mainly determined by how much of each there is in the incoming light from the illumination sources.

Thus what really determines the perceived color of a surface is the law that links incoming light to outgoing light. Seeing color, then, involves the brain figuring out what that law is. The obvious way to do this would be to sample the actual illumination, sample the light coming into the eye, and then, by comparing the two, deduce the law linking them.

--

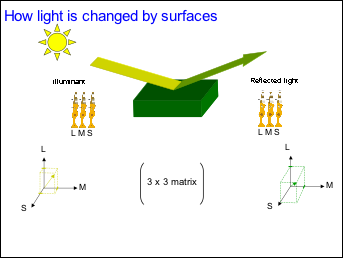

This is illustrated in this figure. The incoming light is sampled by the three types of photoreceptors in the eye, the L, M and S cones. Their response can be represented as a vector in a three-dimensional space. When the incoming light bounces off the surface, the surface absorbs part of it and reflects the rest. This rest can then be sampled again by the eye's three cone types, giving rise to another three-vector.

It turns out that the transformation of the incoming three-vector into the outgoing three-vector can be very accurately described by a 3 x 3 matrix. This matrix is a property of the surface, and is the same for all light sources. It constitutes the law that we are looking for, namely the law that describes how incoming light is transformed by this surface.

It is very easy to calculate what the 3 x 3 matrices are for different surfaces. David Philipona, who is a mathematician, did this simply by going onto the web, finding databases of measurements of surface reflectivity and databases of light spectra (like sunlight, lamp light, neon light, etc.), and figuring out what the matrices were.
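The calculation just described can be sketched in a self-contained way. The Python example below uses made-up, coarse five-band spectra rather than the real cone sensitivities, reflectances and illuminant databases referred to in the talk. For each illuminant it computes the cone three-vector of the incoming light (u) and of the light reflected by a reddish surface (v), then fits the 3 x 3 matrix A that best satisfies v ≈ A u over all illuminants by ordinary least squares, A = (Σ v uᵀ)(Σ u uᵀ)⁻¹. Least squares is one reasonable way to "figure out" the matrix; that it matches the exact procedure used by Philipona is an assumption.

```python
# Toy spectra over five wavelength bands, ordered from short to long wavelengths.
# All numbers are made up for illustration.
CONES = [
    [0.0, 0.1, 0.5, 0.9, 0.6],   # L cones
    [0.05, 0.4, 0.9, 0.5, 0.1],  # M cones
    [0.9, 0.5, 0.1, 0.0, 0.0],   # S cones
]
RED_REFLECTANCE = [0.05, 0.05, 0.1, 0.7, 0.9]  # absorbs short, reflects long wavelengths
ILLUMINANTS = {
    "flat": [1.0, 1.0, 1.0, 1.0, 1.0],
    "warm": [0.2, 0.4, 0.7, 1.0, 1.2],
    "cool": [1.2, 1.1, 0.9, 0.5, 0.3],
    "greenish": [0.4, 0.9, 1.2, 0.8, 0.4],
}


def mat_vec(m, v):
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


def inverse3(m):
    """Inverse of a 3 x 3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is (numerically) singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[adj[r][k] / det for k in range(3)] for r in range(3)]


def fit_surface_matrix(pairs):
    """Least-squares A minimising sum |v - A u|^2, i.e. A = (sum v u^T)(sum u u^T)^-1."""
    vu = [[sum(v[r] * u[c] for u, v in pairs) for c in range(3)] for r in range(3)]
    uu = [[sum(u[r] * u[c] for u, _ in pairs) for c in range(3)] for r in range(3)]
    uu_inv = inverse3(uu)
    return [[sum(vu[r][k] * uu_inv[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


pairs = []
for spectrum in ILLUMINANTS.values():
    incoming = mat_vec(CONES, spectrum)  # u: cone response to the illuminant
    reflected = [s * r for s, r in zip(spectrum, RED_REFLECTANCE)]
    outgoing = mat_vec(CONES, reflected)  # v: cone response to the reflected light
    pairs.append((incoming, outgoing))

red_law = fit_surface_matrix(pairs)
for row in red_law:
    print([round(x, 3) for x in row])
```

With real data one would use many more wavelength samples and illuminants; the point of the sketch is only that a single matrix, fitted once, summarizes how this surface transforms any incoming light.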

Of course, human observers, when they judge that a surface is red, don't do things this way. One way they could do it is to experiment a little, moving the surface around under different lights, and ascertaining what the law is by comparing inputs to outputs. In that respect the law can be seen as a sensorimotor law. In many cases, however, humans don't need to move the surface around to establish the law: this is probably because they already know more or less what the incoming illumination is. But in case of doubt, as when you're in a shop under peculiar lighting, it's sometimes necessary to take a clothing article out of the shop to really know what color it is.

--

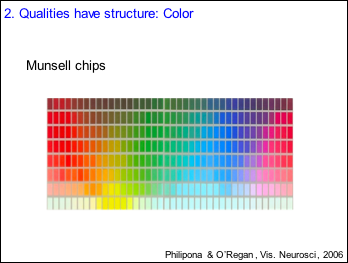

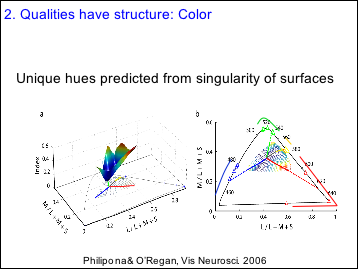

Here is a set of colored chips, called Munsell chips, which are often used in color experiments. Their reflectance spectra are available for download on the web, and we applied David Philipona's method to calculate the 3 x 3 matrices for all these chips. What did this give? Lots of numbers, obviously.

But when we looked more closely at the matrices, we discovered something very interesting. Some of the matrices had a special property: they were what is called singular.

This means that, instead of taking input three-vectors and transforming them into output vectors spread all over the three-dimensional space of possibilities, these matrices take input three-vectors and transform them into a two-dimensional or a one-dimensional subspace of the three-dimensional output space. In other words, these matrices represent input-output laws that are in some sense simpler than average, run-of-the-mill matrices.
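Whether a 3 x 3 law squeezes its outputs into a lower-dimensional subspace can be read off its singular values: a singular value close to zero means one output direction is (nearly) lost. The pure-Python sketch below computes the singular values of a 3 x 3 matrix from the eigenvalues of AᵀA, using the Jacobi eigenvalue method, and reports them normalized by the largest one together with an effective dimension of the output subspace. The function names, the example matrices and the 1e-5 tolerance are hypothetical choices, and this is not necessarily the singularity index plotted in the talk.

```python
import math


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(m):
    return [list(col) for col in zip(*m)]


def symmetric_eigenvalues(m, sweeps=50):
    """Eigenvalues of a symmetric 3 x 3 matrix by cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in m]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(3) for j in range(3) if i != j) < 1e-24:
            break
        for p in range(2):
            for q in range(p + 1, 3):
                if a[p][q] == 0.0:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = (1.0 if theta >= 0 else -1.0) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(3):  # columns p and q: A <- A J
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(3):  # rows p and q: A <- J^T A
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(3)), reverse=True)


def singular_values(m):
    """Singular values of m: square roots of the eigenvalues of m^T m."""
    return [math.sqrt(max(ev, 0.0)) for ev in symmetric_eigenvalues(mat_mul(transpose(m), m))]


def singularity_profile(m, rel_tol=1e-5):
    """Normalized singular values and the effective dimension of the output subspace."""
    sv = singular_values(m)
    if sv[0] == 0.0:
        return [0.0, 0.0, 0.0], 0
    normalized = [s / sv[0] for s in sv]
    return normalized, sum(1 for x in normalized if x > rel_tol)


general = [[0.9, 0.2, 0.1], [0.3, 0.8, 0.2], [0.1, 0.3, 0.7]]  # made-up "ordinary" law
flat = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]      # outputs confined to a plane
line = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [1.0, 2.0, 3.0]]      # outputs confined to a line

for name, m in (("general", general), ("flat", flat), ("line", line)):
    print(name, singularity_profile(m))
```

Measured matrices are never exactly singular, so in practice one looks at how small the smaller normalized singular values are, a graded quantity, rather than at the integer dimension alone.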

--

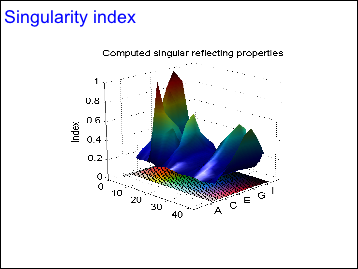

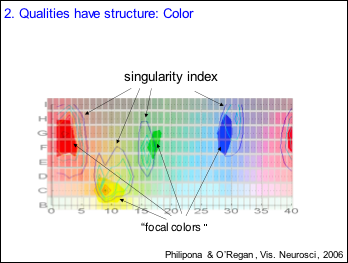

Here is a graph showing the degree of singularity of the matrices corresponding to the different Munsell chips.

You see that there are essentially four peaks in the graph, and they correspond to four Munsell chips, namely those with the colors red, yellow, green and blue.

And this reminds us of something.

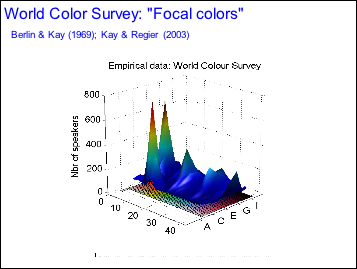

In the 1970s, two anthropologists at Berkeley, Brent Berlin and Paul Kay, studied which colors people in different cultures have names for. They found that certain colors were very frequently given names. Here is the graph showing, for each of the different Munsell chips, the number of cultures in the so-called "World Color Survey" which had a name for it.

And here we see something very surprising. The peaks in this graph, derived from anthropological data, correspond very closely to the peaks in the graph I just showed you of the singularity of the Munsell chips.

--

Here I have superimposed contour plots of the two previous graphs. You see that the peaks of the black contour plots of the singularity data fall within one chip of the peaks of the anthropological data, shown as flat colored areas.

It is as though those colors which tend to be given names are precisely those simple colors that project incoming light into a lower-dimensional subspace of the three-dimensional space of possible lights.

It's worth mentioning that Berlin and Kay, and more recently Kay and Regier, have been seeking explanations of their anthropological findings. Though there are some current explanations based on a combination of cultural and perceptual effects, which do a good job of explaining the boundaries between different color names, no one up to now has been able to explain the particular pattern of peaks of naming probability that we have here. In particular, the red/green and blue/yellow opponent channels proposed on the basis of Hering's findings do not provide an explanation.

On the other hand, it does seem reasonable that names should most frequently be given to colors that are simple, in the sense that when you move them around under different illuminations, their reflections remain particularly stable compared to other colors.

So, in my opinion, the finding that we are able to predict color naming so accurately from first principles, using only the idea of the sensorimotor approach, is a great victory for this approach.

--

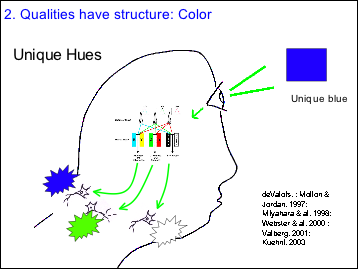

There is another quite independent victory of the

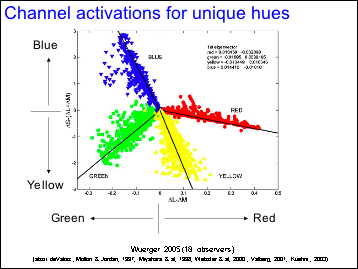

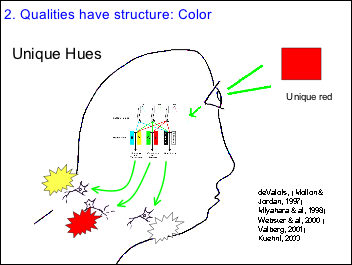

sensorimotor approach to color that concerns what are called unique hues. These are colors that are judged by people to be pure, in the sense that they contain no other colors.

There are unique red, green, yellow and blue hues, and people have measured the wavelengths of monochromatic light which provide such pure sensations.

Unfortunately, the data are curiously variable, and seem to have been changing gradually over the last 50 years. Furthermore, the data have not been explained in terms of the neurophysiological red/green and yellow/blue opponent channels.

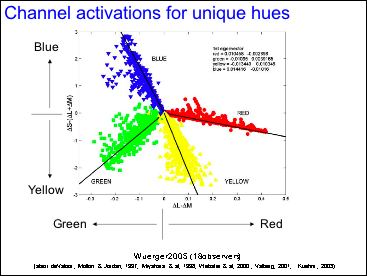

The dots in this graph show empirical data on the channel activations observed to obtain unique red, yellow, green and blue. Instead of crossing at right angles, the data are somewhat skewed.

--

What this means is that, for example, in order to get the sensation of absolutely pure red, you cannot just have the red channel maximally active. You also need a little bit of activation in the yellow channel.

--

Similarly, to get unique blue, you need not just activation in the blue channel, but also some activation in the green channel.

--

On the other hand, it is easy, on the basis of the matrices that we have calculated for colored chips, to make predictions about what people will judge to be pure lights. And these predictions turn out to be spot on the empirical data on observed unique hues. In fact, the black lines in the graph are the predictions from the sensorimotor approach.

--

Another fact about unique hues is their variability. The small colored triangles on the edge of the diagram here on the right show the wavelengths measured in a dozen or so different studies to correspond to unique red, yellow, blue and green. You see that the data are quite variable. The colored lines are the predictions of variability proposed by the sensorimotor approach. Again, the agreement is striking.

Incidentally, we can also account for the fact that the data on unique hues have been changing over recent years. We attribute this to the idea that, in order to make the passage from surfaces to lights, people must have an idea of what they call natural white light. And this may have been changing because of the transition from incandescent lighting to the neon lighting used more often today.

--

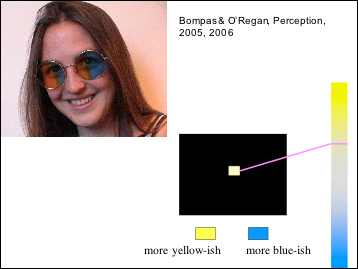

As a final point about color and the sensorimotor approach, I'd like to mention some experiments being done by my former PhD student Aline Bompas. Here she is wearing what look like trendy psychedelic spectacles.

The effect of these spectacles is that when she looks to the right, everything is tinged with yellow, and when she looks to the left, everything is tinged with blue. Under the sensorimotor theory, this is a sensorimotor dependency that the brain should learn and grow accustomed to. After a while, we predict, people wearing such spectacles should no longer see the color changes.

Furthermore, once people have adapted, if they then take off the spectacles, they should see things tinged the opposite way. For example, if they look at a grey spot on a computer screen, then when they turn their head one way or the other, they should have to adjust the spot to be more yellowish or more bluish for it to appear grey. And this should happen despite the fact that they are no longer wearing any spectacles at all.

Aline Bompas has been doing interesting experiments that do indeed confirm predictions of this kind.

--

Another application of the sensorimotor approach to the qualities of sensation concerns body sensation.

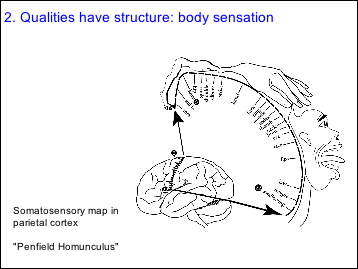

Why is it that when I touch you on the arm, you feel it on your arm? You might think the answer has something to do with the fact that sensory stimulation is relayed up to a somatosensory map in parietal cortex, where different body parts are represented, as in the well-known Penfield homunculus.

But in fact this doesn't explain anything. The fact that a certain brain area represents a certain body part doesn't explain what it is about that brain area that gives you sensation in that particular body part. What is it about the arm location in the somatosensory map that gives you that "arm" feeling rather than, say, the foot feeling, or any other feeling?

The sensorimotor approach claims, on the contrary, that what constitutes the feel of touch on the arm is a set of potential changes that could occur. When your arm is being touched, moving your arm changes the incoming tactile stimulation, whereas moving, say, your foot doesn't change anything. And when your arm is being touched, if you look at your arm you're likely to see something touching it, whereas if you look, say, at your foot, you're not likely to see anything touching it.

What constitutes that arm-feel is the set of all such potential sensorimotor dependencies.

Now if this is true, it makes an interesting prediction. It

predicts that if we were to change the systematic dependencies, then we should

be able to change the associated feel. This is exactly what is done in the

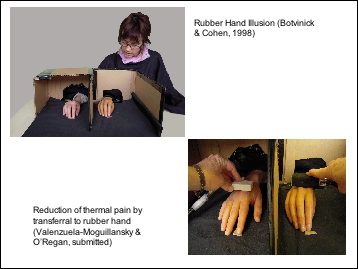

Rubber Hand Illusion.

--

In the Rubber Hand Illusion (RHI), a person watches a rubber hand being stroked while their own real hand is stroked simultaneously. After a few minutes, most people get the peculiar impression that the rubber hand belongs to them. This is measured by a questionnaire and by a behavioural response: indicating the felt position of their index finger.

This result is very much in keeping with the predictions of the sensorimotor approach. With my student Camila Valenzuela-Moguillansky, we are working on this phenomenon, in particular with regard to pain. As shown in the lower figure, if we simultaneously stimulate the real hand and the rubber hand with a painful heat stimulus, people who have transferred ownership of their hand to the rubber hand feel less pain in the real hand.

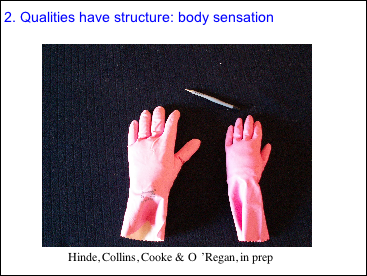

--

It's also possible to use rubber hands of different sizes, as here, and give people the impression that their real hands are bigger or smaller than they really are.

--

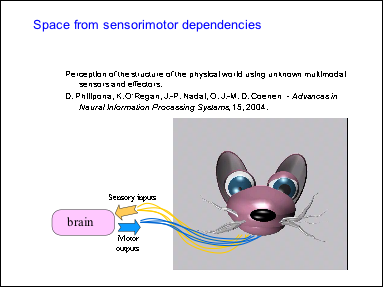

Yet another application of the sensorimotor approach I would like to mention concerns the perception of space. In this work, done with my mathematics student David Philipona, we showed how it is possible for a brain to deduce the algebraic group structure of three-dimensional space by looking at the sensorimotor laws linking sensory input to motor output. We did this for a simulated rat with a moving head, moving eyes and contracting pupils. The sensory input was multimodal, coming from vision, audition and touch (via the whiskers). We have been looking further into how this approach might help in robotics for multimodal sensory fusion and calibration.

--

Up to now I've looked at how the sensorimotor approach can deal with the ineffability and the structure of the qualities of feel.

Now let's look at the third mystery of feel: the question of presence, i.e., why people say "there's something it's like" to have a feel.

--

I mentioned earlier the difficulty of really understanding what it means to say that there's something it's like to feel, and that to solve this problem we might use an operational definition and proceed by contrast. This could consist in noting that some processes in the brain and nervous system, such as autonomic functions on the one hand and thoughts on the other, presumably do not possess the mysterious "something it's like".

The difficulty with the traditional way of thinking about feel as being generated by the brain is that there seems to be no way to conceive why autonomic and thought mechanisms should generate no feel, whereas sensory mechanisms do.

Under the sensorimotor view, on the other hand, we are no longer searching for physical or physiological mechanisms that do or do not generate the "something it's like". Instead, we can search for characteristics of our interaction with the environment that could be said to correspond to the notion of feeling like something.

---

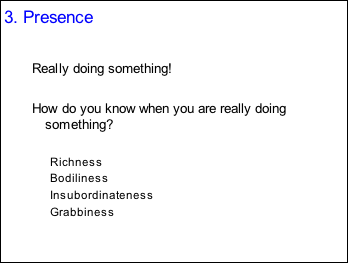

If you ask yourself why, say, there's something it's like to feel the softness of a sponge, I think you come to the conclusion that the reason is pretty obvious: you really are doing something, not just thinking about it… or letting your brain deal with it automatically.

But then what is it about a real interaction with the world that allows you to know that you really are having such an interaction? How do you know, when you're squishing a sponge, that you REALLY ARE squishing it, and not just thinking about it, hallucinating or dreaming about it?

The answer, I think, lies in four aspects of real-world interactions: richness, bodiliness, insubordinateness and grabbiness.

--

First of all, the world is rich in detail. There is so much information in the world that you cannot possibly imagine all of it. If you're just thinking about squishing a sponge, you cannot imagine all the different things that might happen when you press here or there. If you're imagining a visual scene, you have to rely on your own inventiveness to imagine all the details. But if you really are looking at a scene, then wherever you look, the world provides infinite detail.

So richness is a first characteristic of real-world interactions that distinguishes them from imagining or thinking about them.

--

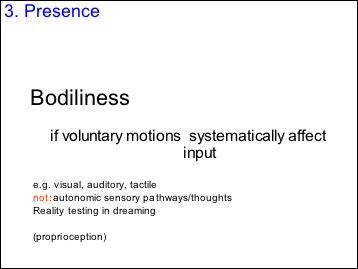

Bodiliness is the fact that voluntary motions of your body systematically affect sensory input. This is an aspect of sensory interactions that distinguishes them from autonomic processes in the nervous system and from thoughts.

Sensory input deriving from visceral autonomic pathways is not generally affected by your voluntary actions. Your digestion, your heartbeat, the glucose in your blood, although they do depend somewhat on your movements, are not as intimately linked to them as the input from your visual, auditory and tactile senses. If you are looking at a red patch and you move your eyes or your body, the sensory input changes dramatically. If you are listening to a sound, any small movement of your head immediately changes the sensory input to your ears in a systematic and lawful way. But if you're thinking about a red patch of color or about listening to a sound, moving your eyes, your head or your body does not alter the thought.

Note that the idea that bodiliness should be a test of real sensory interaction is related to the common advice that one way of testing whether you are dreaming is to perform a voluntary action that has an effect on the environment, like switching on a light.

But now consider the interesting case of proprioception. Here we definitely have bodiliness, since voluntary limb movements systematically affect incoming proprioception. Yet I don't think proprioception is really felt in the same way as other sensory feels are felt.

Bodiliness by itself therefore seems not to guarantee that a sensation will be felt.

--

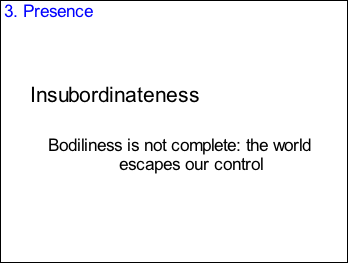

Indeed, bodiliness is not a perfect guarantee that a sensation is real because, for a sensation to be real, bodiliness must actually be incomplete. What characterises sensations coming from the world is precisely that they are not completely determined by our body motions. The world has a life of its own: mice may move, bells may ring, without our doing anything to cause it. I call this insubordinateness. The world partially escapes our control.

--

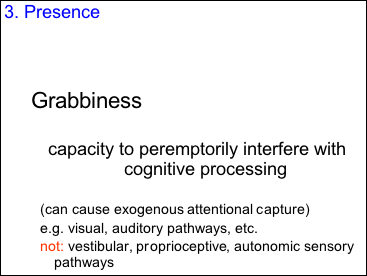

And then there is grabbiness. This is the fact that sensory systems in humans and animals are hard-wired so as to peremptorily interfere with cognitive processing. What I mean is that when there is a sudden flash or a loud noise, we react automatically by orienting our attention towards the source of the interruption. This is an objective fact about the way some of our sensors, namely precisely those that we say we feel, are wired up. The visual, auditory, tactile, olfactory and gustatory systems possess sudden-change (or "transient") detectors that can interrupt my ongoing cognitive activities and cause an automatic orienting response. On the other hand, a sudden change in my blood sugar or in other autonomic pathways, or a sudden vestibular or proprioceptive change, will not cause exogenous orienting. Of course such changes may make me fall over or become weak, for example, but they do not directly prevent my cognitive processing from going on more or less as normal, although there may of course be indirect effects through the fact that I fall over or become weak.

My idea is that what we call our real sense modalities are precisely those that are genetically hard-wired with transient detectors, so as to be able, in cases of sudden change, to interrupt our normal cognitive functioning and cause us to orient towards the change. The other, visceral and autonomic, sensing pathways are not wired up this way. It is as though normal sense modalities can cause something like a cognitive "interrupt", whereas other sensing in the nervous system cannot.

--

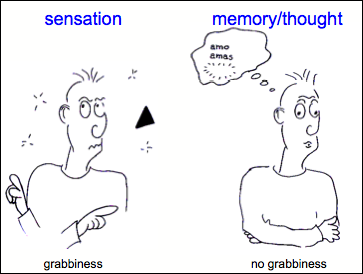

Note that grabbiness also allows us to understand why thoughts are not perceived as real sensations. If you are seeing or hearing something, any change in the environment immediately creates a signal in the transient detectors and alerts you that something has happened. But imagine that overnight the neurons in your brain that code the third person of the Latin verb "amo" die. Nothing wakes you up to tell you this has happened. To know it, you have to actually think about whether you still remember the third person of "amo". In general, except in the case of obsessions, thoughts and memories do not by themselves interrupt your cognitive processing in the way that loud noises, sudden flashes or pungent smells do by causing automatic orienting.

--

Grabbiness is particularly important in giving sensory feel its "presence" or "what it's like". I would like to illustrate this with the example of seeing.

When we see a visual scene, we have the impression of seeing everything, simultaneously, continuously, and in all its rich, detailed splendor. The visual scene imposes itself upon us as being "present". Part of this presence comes from the richness, bodiliness and insubordinateness provided by vision. The outside world is very detailed, much more so than any imaginable scene. It has bodiliness because whenever we move our eyes or body, the input to our eyes changes drastically. And it is insubordinate because our own movements are not the only thing that can cause changes in input: all sorts of external changes can also happen.

But there is also grabbiness. Usually, if something suddenly changes in the visual scene, you see the change, because transient detectors in the visual system automatically register it and orient your attention to it, as in this movie:

http://nivea.psycho.univ-paris5.fr/CBMovies/FarmsMovieFlickerNoblank.gif

--

But if you make the change so slow that the transient detectors don't work, then an enormous change can happen in a scene without your attention being drawn to it, as in this movie:

http://nivea.psycho.univ-paris5.fr/sol_Mil_cinepack.avi

Here almost a third of the picture changes without your noticing it.

--

Another way of preventing transient detectors from functioning normally is to flood them with additional transients, as here, where the many white "mudsplashes" prevent you from noticing the transient that corresponds to an important change in the picture:

http://nivea.psycho.univ-paris5.fr/CBMovies/ObeliskMudsplashMovie.gif
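The slow-change and mudsplash demonstrations can be made concrete with a toy model of a transient detector. What follows is a minimal sketch under loudly stated assumptions, not the model behind these experiments: a "scene" is a hypothetical one-dimensional list of intensities, the detector fires wherever intensity changes by more than an assumed threshold between successive frames, and all function names (`transients`, `detect_events`, `abrupt_change`, `slow_change`, `mudsplash_change`) are illustrative inventions. An abrupt change yields a single transient at the changed location; the same change spread over many small steps yields none; and a change accompanied by mudsplashes yields several simultaneous transients, so the one that matters is not singled out.

```python
"""Hypothetical toy transient detector (not the author's model): it catches
abrupt changes but misses slow changes and changes masked by mudsplashes."""

from typing import List, Tuple

Frame = List[float]   # assumed 1-D "image": one intensity value per location
THRESHOLD = 0.2       # assumed smallest frame-to-frame change that counts as a transient


def transients(prev: Frame, curr: Frame, threshold: float = THRESHOLD) -> List[int]:
    """Locations whose intensity changes by more than `threshold` between two frames."""
    return [i for i, (a, b) in enumerate(zip(prev, curr)) if abs(b - a) > threshold]


def detect_events(frames: List[Frame], threshold: float = THRESHOLD) -> List[Tuple[int, List[int]]]:
    """Return (frame index, transient locations) for every frame that triggers the detector.

    One location is an unambiguous target for automatic orienting; several
    simultaneous locations compete for attention."""
    events = []
    for t in range(1, len(frames)):
        hits = transients(frames[t - 1], frames[t], threshold)
        if hits:
            events.append((t, hits))
    return events


def abrupt_change(scene: Frame, target: int, new_value: float) -> List[Frame]:
    """The target location jumps to its new value in a single frame."""
    changed = list(scene)
    changed[target] = new_value
    return [list(scene), changed]


def slow_change(scene: Frame, target: int, new_value: float, steps: int) -> List[Frame]:
    """The same overall change, spread evenly over `steps` frames."""
    frames = []
    for k in range(steps + 1):
        frame = list(scene)
        frame[target] = scene[target] + (new_value - scene[target]) * k / steps
        frames.append(frame)
    return frames


def mudsplash_change(scene: Frame, target: int, new_value: float, splashes: List[int]) -> List[Frame]:
    """The abrupt change happens together with white "mudsplashes" at other locations."""
    frames = abrupt_change(scene, target, new_value)
    for s in splashes:
        frames[1][s] = 1.0  # a white splash
    return frames


if __name__ == "__main__":
    scene = [0.5] * 10
    print(detect_events(abrupt_change(scene, 3, 1.0)))                 # [(1, [3])]
    print(detect_events(slow_change(scene, 3, 1.0, steps=50)))          # []
    print(detect_events(mudsplash_change(scene, 3, 1.0, [0, 6, 8])))    # [(1, [0, 3, 6, 8])]
```

The threshold is the key design choice in this sketch: any per-frame change below it is invisible to the detector, which is why the slow version can accumulate a large change (here from 0.5 to 1.0) without ever triggering orienting.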

--

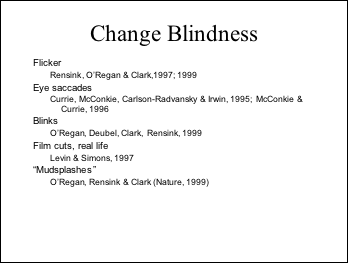

These "slow change" and mudsplash demonstrations are part of a whole literature on "change blindness". Change blindness can also occur when the transients are drowned out by an interruption between the two scenes, caused by flicker in the image, by eye saccades, blinks or film cuts, or even by real-life interruptions.

--

So, to summarize so far:

I have shown how the new view of feel as a sensorimotor interaction with the environment can explain the three mysteries of feel: its ineffability, the structure of its qualities, and its presence. These are all explicable in terms of objective aspects of the sensorimotor laws that are involved when we engage in a sensorimotor interaction with the environment.

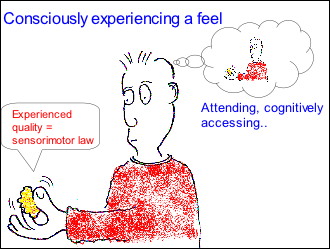

But these are all aspects of the QUALITY of feel. You may have noticed that I have said nothing at all about how, when you have a feel…

--

…you can have the impression of consciously experiencing that feel?

--

But I think this poses no theoretical problem. I would like to claim that what we mean by consciously experiencing a feel is cognitively accessing the quality of the sensorimotor interaction we are currently engaged in.

As an example, take the opposite case: driving down the highway while thinking of something else. When you do this, you would not say you are in the process of experiencing the driving feeling.

For you to actually experience something, you have to be concentrating your attention on it: you have to be cognitively engaged with the fact that you are exercising the particular sensorimotor interaction involved.

--

Illustrations of the role of attention in perception are well known in psychology.

One very impressive practical application of this is in the domain of traffic safety. Researchers studying road accidents know of a phenomenon called "LBFTS": "Looked but failed to see". It turns out that LBFTS is the second most frequent cause of road accidents after drunk driving. The phenomenon consists in the fact that the driver is looking straight at something, but for some reason doesn't see it.

Particularly striking cases of this occur at railway crossings. You might think that the most frequent accident at a railway crossing would involve a driver trying to get across the track quickly right before the train comes through. But in fact the most frequent cause of accidents at railway crossings is exactly the opposite: the train is rolling quietly across the crossing, and a driver comes up and, although he is presumably looking straight ahead at the moving train, simply doesn't see it and crashes directly into it. If you search the net for "car strikes train", you'll find hundreds of examples in local newspapers, like this one.

--

This shows that what you look at does not determine what you see. Here's another example: you may think it says here "The illusion of seeing". Look again.

There are actually two "of"s. Sometimes people take minutes to discover this.

The reason is that seeing is not passively receiving information on your retina. It is interrogating what's on your retina and making use of it. If your interrogation assumes there's only one word "of", then you simply don't see that there are two.

Likewise, if you're driving up to the railway crossing and thinking about something else, then even though your eyes are on the train, you simply don't see it, and… bang.

--

Psychologists are of course very interested in attention, and do interesting experiments to test your ability to keep your attention on something when all sorts of other things are going on in the visual field.

Here's an example made by my former student Malika Auvray, where you have to follow the coin under the cup. It's a bit tricky because there are lots of hands and cups all moving around, so concentrate!

http://nivea.psycho.univ-paris5.fr/demos/BONETO.MOV

At the end of the sequence: did you see anything bizarre?

It was the green pepper replacing one of the cups. Many people don't notice it at all, presumably because they're busy following the coin, even though the green pepper is in full view and perfectly obvious.

--

The demo I just showed is a poor version of a truly wonderful demo made by Dan Simons, where a gorilla walks through a group of people playing a ball game, and you simply don't see the gorilla even though it's in full view.

Transport for London has reworked this demo into an advertisement encouraging people to drive carefully, and you can find it on YouTube:

http://www.youtube.com/watch?v=Ahg6qcgoay4&NR=1&feature=fvwp

--

So, to conclude so far:

Consciously experiencing a feel requires you first to be engaged in the skill implied by that feel. If the skill has the properties that sensory feels have, that is, if it has richness, bodiliness, insubordinateness and grabbiness, then it will have the sensory presence or "what it's like" that real sensory feels possess.

If, in addition, you are attending to, or cognitively accessing, the feel, you will be conscious that you are doing so.

But wait, there's a problem: who is "you"?!

It doesn't make much sense to say that a person or an agent is consciously experiencing the feel unless the person or agent exists as a person, that is, unless the agent has what we call a SELF.

--

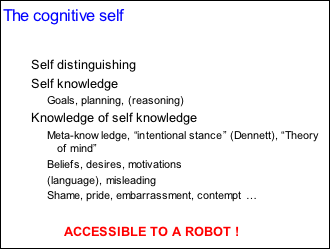

Is this a problem for science? Philosophers have looked carefully at the problem posed by the notion of self and concluded that, although the problem is tricky, it is not "hard" in the same sense as the problem of feel.

One aspect of the self is what could be called the cognitive self, which involves a hierarchy of cognitive capacities.

At the simplest level is "self-distinguishing", that is, the ability of a system or organism to distinguish its body from the outside world and from the bodies of other systems or organisms.

The next level is "self-knowledge". Self-knowledge, in the very limited sense I mean here, is something a bird or a mouse displays as it goes about its daily activities. The animal exhibits cognitive capacities like purposive behavior, planning and even a degree of reasoning. To do this, its brain must distinguish its body from the world and from other individuals. On the other hand, the bird or mouse as an individual presumably has no concept of the fact that it is doing these things, nor even that it exists as an individual.

Such knowledge of self-knowledge is situated at the next level of my classification. It can lead to subtle strategies that one individual can employ to mislead another, strategies that are really seen only in primates.

Knowledge of self-knowledge is most typically human, and may have something to do with language. It underlies what the philosopher Daniel Dennett calls the "intentional stance" that humans adopt in their interactions with other humans. The individual can have a "Theory of Mind": it can empathize with others and interpret other individuals' acts in terms of beliefs, desires and motivations. This gives rise to finely graded social interactions ranging from selfishness to cooperation and involving notions like shame, embarrassment, pride and contempt.

I have called all these forms of the cognitive self "cognitive" because they involve computations that seem to be within the realm of symbol and concept manipulation.

There seems to be no conceptual difficulty involved in building these capacities into a robot. It may be difficult today, particularly as we don't know very well how to make devices that can abstract concepts. Furthermore, we don't currently have many robot societies in which high-level meta-knowledge of this kind would be useful.

But ultimately, I think the consensus is that there is no logical obstacle ahead of us.

So I think we can say that the cognitive self is…

Accessible to a robot

--

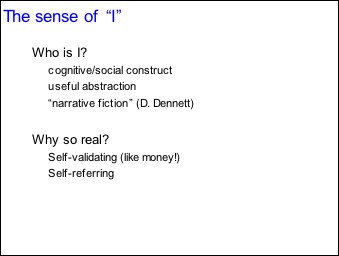

On the other hand, something still seems to be missing. We as humans have the strong impression that there is someone, namely ourselves, "behind the commands". We are not just automata milling around doing intelligent things: there is a pilot in the system, so to speak, and that pilot is "I".

It is I who am doing the thinking, acting, deciding and feeling. How can the self seem so real to us, and who or what is the "I" that has this impression?

Here I want to appeal to current research in social and developmental psychology. Scientists in these fields agree that although we have the intimate conviction that we are individuals with a single, unified self, the self is actually a construction, with different, more or less compatible facets, that each of us gradually builds as we grow up.

The idea is that the self is a useful abstraction that our brains use to describe, first to others and later to ourselves, the mental states that "we", as individual entities in a social context, have. It is what Dennett has called a narrative fiction.

But then how can the self seem so real to us? The reason is that seeming real is part of the narrative that has been constructed. The cognitive construction our brains have developed is self-validating: its primary characteristic is precisely that we should be individually and socially convinced that it is real.

It's a bit like money: money is only bits of metal or paper. It seems real to us because we are all convinced that it should be real. By virtue of that self-validating fact, money actually becomes very real: indeed, society in its current form would fall apart without it.

The self is actually even more real than money because it has the additional property of being self-referring: as in some contemporary novels, the "I" in the story is a fiction that the "I" is creating about itself.

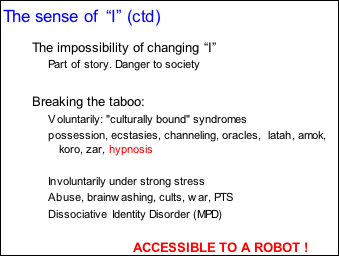

In that case, shouldn't we be able to change the story in mid-course? If our selves are really just "narrative fictions", then we would expect them to be fairly easy to change, and furthermore by ourselves!

--

But actually this does not work. It is necessarily part of the very construction of the social notion of self that we must be convinced that our selves are very difficult to change. After all, society would fall apart if people could change their personalities from moment to moment.

But couldn't we just overcome this taboo mentally, by force of will? If the self is really just a story, changing it should surely be very easy.

It turns out that under some circumstances we can break the taboo of thinking that our selves are impossible to change, and flip into altered states where we become different, or even someone else. Such states can be obtained voluntarily through a variety of "culturally bound" techniques, such as possession trances (among others: ecstasies, channeling provoked in religious cults, oracles, witchcraft, shamanism or other mystical experiences; latah, amok, koro) and hypnosis; or sometimes involuntarily, under strong psychological stress (physical abuse, brainwashing by sects or religious cults, war; post-traumatic stress disorder, Dissociative Identity Disorder…).

Hypnosis is interesting because it is so easy to induce, confirming the idea that the self is a story we could easily control if only we decided to break the taboo. Basic texts on hypnosis generally provide an induction technique that a complete novice can use to hypnotize someone else. This suggests that submitting to hypnosis is a matter of choosing to play out a role that society has familiarized us with, namely "the role of being hypnotized". It is a culturally accepted loophole in the taboo, a loophole that allows people to explore a different story of "I". An indication that it is truly cultural is that hypnosis only works in societies where the notion is known: you can't hypnotize people unless they've heard of hypnosis.

This is not to say that the hypnotic state is a pretense. On the contrary, it is a convincing story to the hypnotized subject, just as convincing as the normal story of "I". So convincing, in fact, that clinicians are using it more and more in their practices, for example to complement or replace anesthesia in painful surgical operations.

There is also the fascinating case of Dissociative Identity Disorder (formerly called Multiple Personality Disorder, MPD). A person with Dissociative Identity Disorder may hear the voices of different "alters", and may flip into "being" one or another of these people at any moment.

The different alters may or may not know of each other's existence. The surprising rise in the incidence of Dissociative Identity Disorder/MPD over the past decades signals that it is indeed a cultural phenomenon. Under the view I am taking here, Dissociative Identity Disorder/MPD is a case where an individual resorts to the culturally accepted ploy of splitting their identity in order to cope with extreme psychological stress. Each of these identities is as real as the others, and as real as a normal person's identity, since all are stories.

In summary, the rather troubling idea that the sense of self is a social construction actually seems to be the mainstream view of the self in social psychology.

If this view is correct, then we can confirm that there really is no logical obstacle to our understanding the emergence of the self in brains. Like the cognitive aspect of the self, the sense of "I" is a kind of abstraction that we can envisage emerging once a system has sufficient cognitive capacities and is immersed in a society where such a notion would be useful. The self is:

Accessible to a robot

--

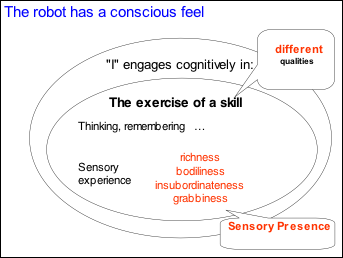

So we can now finally come to the conclusion. The idea is that I have a conscious phenomenal experience when this social construct of "I" engages cognitively in the exercise of a skill. If the skill is purely mental, like thinking or remembering, it will have no sensory quality. But if it involves a sensorimotor interaction with the environment, then it will have richness, bodiliness, insubordinateness and grabbiness. In that case it will have the "presence" or "what-it-is-likeness" of a sensory experience.

Notice that there are two different mechanisms involved here. The outside part, the knowing part, is cognitive: it involves cognitive processing, paying attention. There is nothing magical about this, however. It is simply a mechanism that brings cognitive processing to bear on something so that it becomes available to one's rational activities, that is, to one's abilities to make decisions, judgments and possibly linguistic utterances about it. It is perhaps what Ned Block calls access consciousness.

The inside part is the skill involved in a particular experience. It is something that you do. Your brain knows how to do it, and has mastery of the skill in the sense that it is tuned to the possible things that might happen when it ...

The outside, cognitive part determines WHETHER you sense the experience.

The inside, skill part determines WHAT the experience is like.

--

In summary, the standard view of experience supposes that it is the brain that creates feel. This standard view leads to the "hard" problem of explaining how physico-chemical mechanisms in the brain might generate something psychological, outside the realm of physics and chemistry. This explanatory gap arises because the language of physics and chemistry is incommensurable with the language of psychology.

The sensorimotor view overcomes this problem by conceiving of feel as a way of interacting with the environment. The quality of feel is simply an objective quality of this way of interacting. The language with which we objectively describe the laws governing such interactions and the language we use to describe our feels are commensurable, because they are the same language. What we mean when we say there is something it's like to have a feel can be expressed in the objective terms of richness, bodiliness, insubordinateness and grabbiness. What we mean when we say we feel softness or redness can be expressed in terms of the objective properties of the sensorimotor interaction we engage in when we feel softness or redness.

--

For further information, here is the address of my web site.